DeepSeek Statistics, Facts, Users, & Performance Benchmarks


DeepSeek, an emerging force in AI-driven search and analytics, has rapidly gained traction since its inception.

With advanced machine learning models and a growing user base, it aims to redefine how people access and interpret information.

But just how big is DeepSeek in the AI landscape?

How does its growth compare to industry leaders, and what trends are shaping its adoption? This curated article dives into DeepSeek’s latest statistics, offering insights into its user base, market presence, and the key factors driving its rise in the competitive AI sector.

Key DeepSeek statistics

- DeepSeek was founded in 2023 by Liang Wenfeng.

- The company operates with a team of fewer than 200 employees.

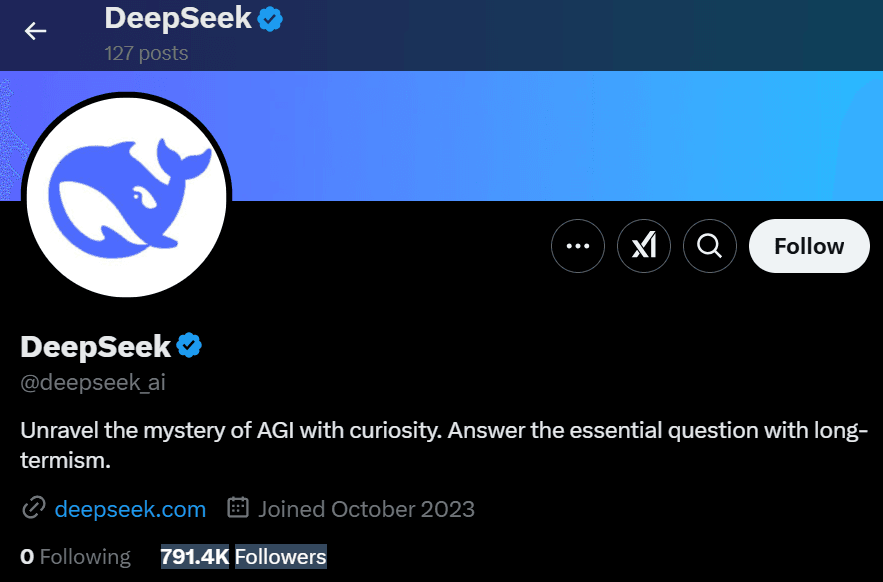

- As of January 2025, DeepSeek has 791.4K followers.

- In the last 6 months, the company’s organic search traffic increased by approximately 584.21%.

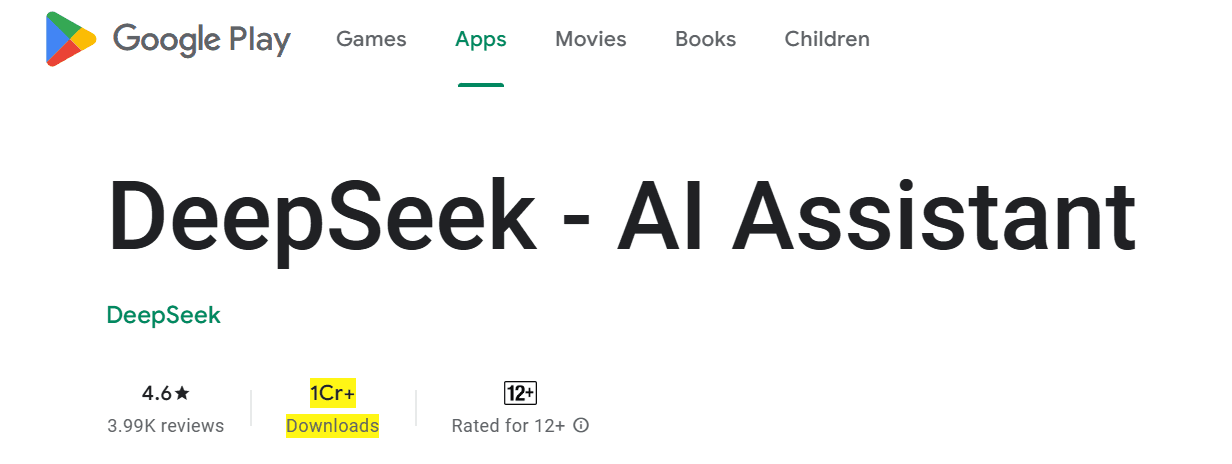

- DeepSeek's chatbot app has more than 10 million downloads on Google Play.

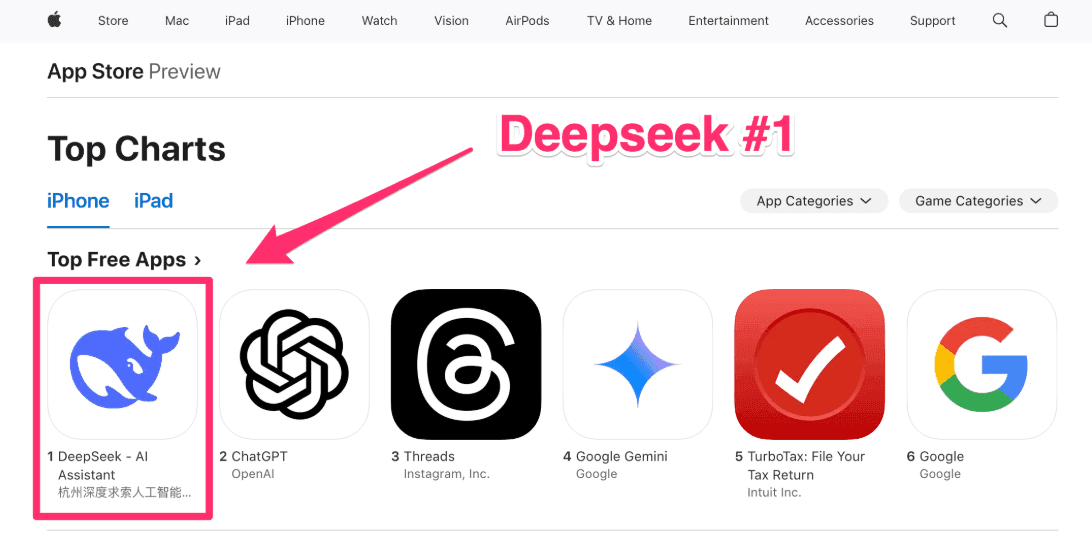

- The app has surpassed ChatGPT in popularity on the iOS App Store.

- The company’s largest user base is in China (39%), followed by the United States (16%) and India (10%).

- Cost Advantage: Trains models at $6M/run (vs. ChatGPT's $100M+ estimates)

- Market Impact: Caused 17% Nvidia stock drop ($593B loss) post-launch

- Performance: Outperforms GPT-4 in 9/12 benchmarks including coding (82.6 vs 80.5) and math (90.2 vs 74.6)

- Growth Metrics: 584% organic traffic surge, 10M+ downloads, #1 iOS app in US

- Pricing: 80% cheaper than ChatGPT ($0.55 vs. $5 per million input tokens)

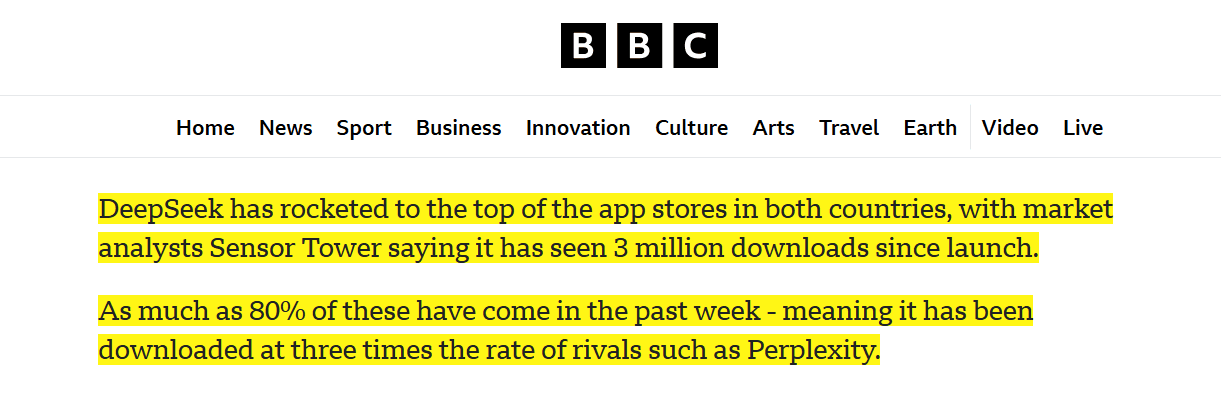

- 80% of DeepSeek’s downloads occurred in just the past week.

- The training cost for DeepSeek-R1 is $6 million per run.

- The token pricing for DeepSeek-R1 is $0.55 per million input tokens and $2.19 per million output tokens.

- DeepSeek-V3 has achieved impressive results in AI benchmarks, including a MMLU score of 88.5, DROP (3-shot F1) score of 91.6, HumanEval-Mul score of 82.6, and MATH-500 score of 90.2.

What is DeepSeek?

DeepSeek is a Chinese AI company specializing in open-source large language models (LLMs).

Founded in 2023 and backed by the hedge fund High-Flyer, the company gained prominence in January 2025 when its chatbot app surpassed ChatGPT as the most downloaded free iOS app in the U.S. The company released its first free chatbot app on January 20, 2025.

Who is the Founder of DeepSeek?

The founder and CEO of DeepSeek is Liang Wenfeng, who graduated from Zhejiang University with a degree in computer science in 2007.

After graduation, Liang worked for several years in the financial industry before co-founding High-Flyer in 2015.

How many people work at DeepSeek?

According to Wikipedia, DeepSeek operates with a lean team of fewer than 200 employees, with a focused approach to AI research and development.

How many followers does DeepSeek have?

As of January 31, 2025, DeepSeek has 791.4K followers.

DeepSeek Important Facts

Here are some important facts about DeepSeek:

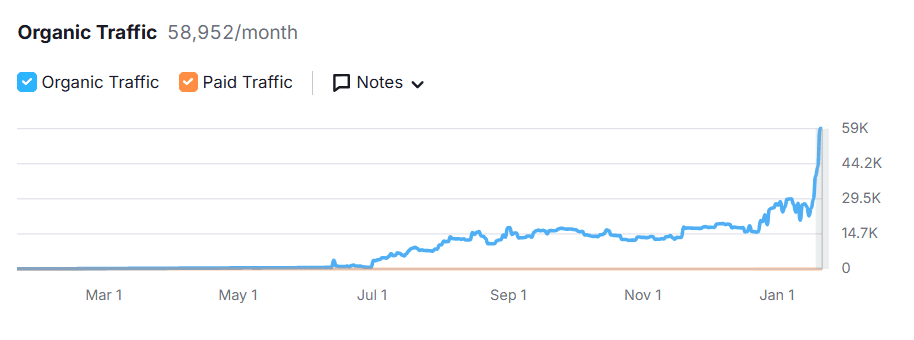

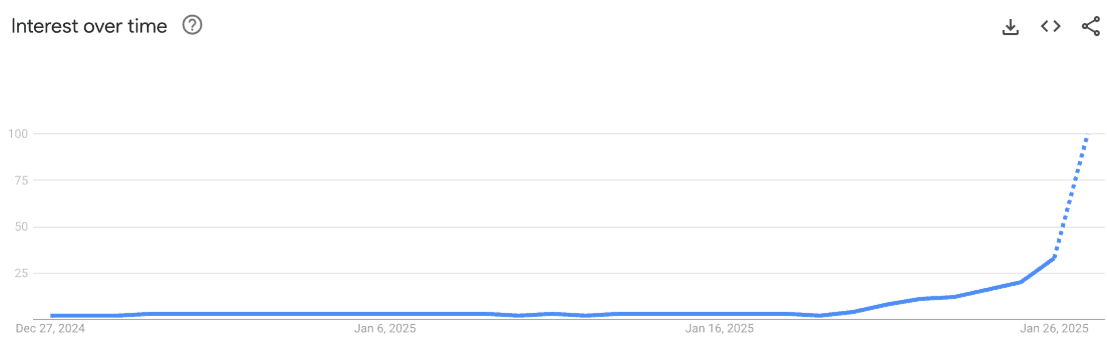

DeepSeek Achieves Massive Growth in Organic Search Traffic

DeepSeek's organic search traffic has grown sharply over the last 6 months, rising from 8,616 to 58,952, a significant improvement in visibility and reach across search engines.

The percentage increase in organic search traffic is approximately 584.21%.
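For readers who want to check the growth figure, here is a minimal Python sketch of the calculation. The function name `percent_increase` is our own, and the two inputs are the traffic numbers quoted above.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage change going from `old` to `new`."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) / old * 100


# Organic search traffic figures quoted in this article.
growth = percent_increase(8_616, 58_952)
multiple = 58_952 / 8_616

print(f"Increase: {growth:.1f}%")          # Increase: 584.2%
print(f"Traffic multiple: {multiple:.1f}x")  # Traffic multiple: 6.8x
```

In other words, a 584% increase means traffic is now roughly 6.8 times its starting level, not 5.8 times.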

How Many Users Does DeepSeek AI Have?

Although DeepSeek has not published an official user count, search trends and download data point to a large and fast-growing user base.

On the Google Play Store, DeepSeek has more than 10 million (1 crore) downloads.

In the iOS App Store, DeepSeek is trending as one of the top free apps, surpassing ChatGPT in popularity.

DeepSeek Downloads Statistics

Country Specific Downloads

DeepSeek’s chatbot app has seen a massive surge in global downloads, with China leading the way at 39% of total users.

This is more than double the downloads from the United States (16%) and nearly four times that of India (10%), highlighting China’s strong adoption of the AI tool.

Surge in DeepSeek Downloads in Just One Week

According to a BBC article, the app has been downloaded about 3 million times since its launch and, even more surprisingly, 80% of those downloads happened in the past week alone.

This rapid growth shows how quickly people are adopting DeepSeek’s AI technology.

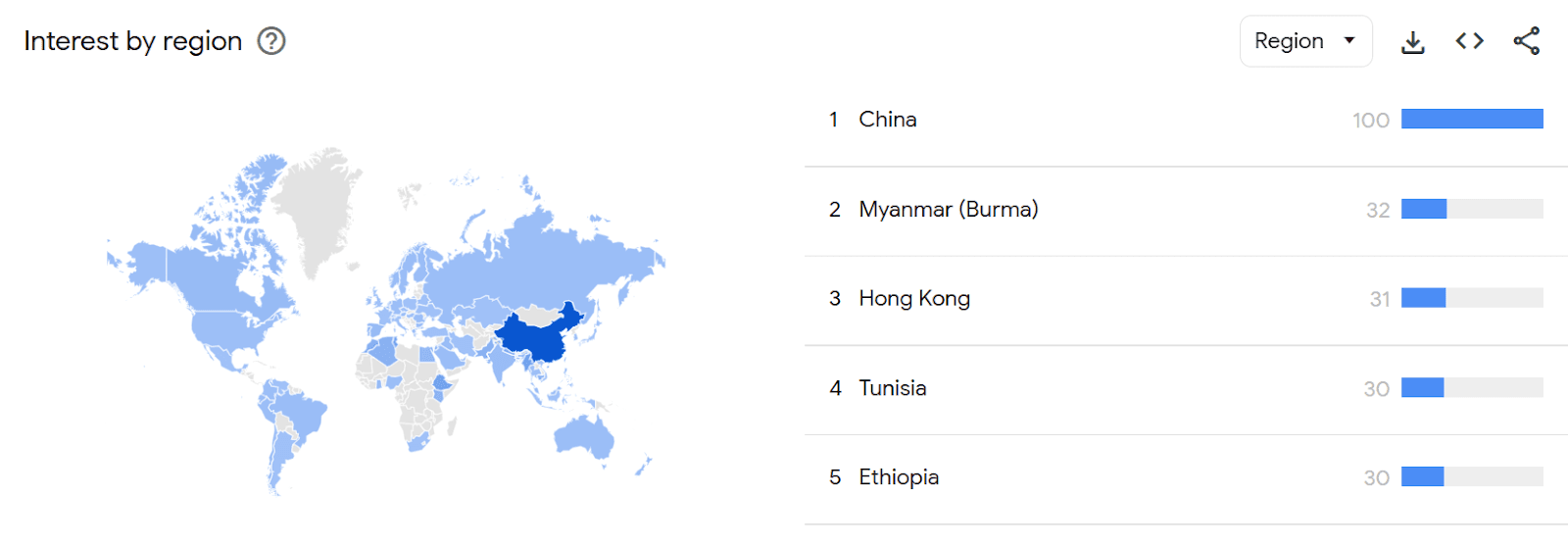

Search Trends of DeepSeek by Region

According to Google Trends data, China shows the highest interest in DeepSeek, with an interest score of 100 (Google Trends scores are relative, with 100 marking the region of peak interest). This reflects strong engagement with the platform, driven by China's leading AI industry and its status as DeepSeek’s home base.

Myanmar (Burma) follows with a score of 32, reflecting growing curiosity about AI technologies in the region. Other regions such as Hong Kong, Tunisia, and Ethiopia also show notable interest, signaling the expanding global adoption of AI tools like DeepSeek.

DeepSeek-R1 Shakes AI Market

How much did Nvidia lose because of DeepSeek?

After the launch of DeepSeek-R1, Nvidia’s stock dropped by 17%, erasing close to $593 billion in market value. This was because DeepSeek demonstrated that a high-performing AI model could be developed at a significantly lower cost than its competitors' models.

This raised concerns about the future demand for expensive AI chips, especially those produced by Nvidia, and the profitability of companies reliant on these chips for AI model development and operation.

DeepSeek Upsets the AI Market with Lower AI Training Costs

DeepSeek Training

DeepSeek-R1 was trained for just $6 million per run, which is considerably less than the estimated costs for models like ChatGPT and Gemini.

The AI model was trained on an impressive 14.8 trillion tokens and required 2.788 million H800 GPU hours for the training process.
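As a back-of-the-envelope check, the three figures above can be combined into an implied price per GPU hour and a training throughput. This is only a sketch using the numbers quoted in this article; the variable and function names are ours, and the result is not an official DeepSeek figure.

```python
TRAINING_COST_USD = 6_000_000  # per-run cost quoted in this article
GPU_HOURS = 2_788_000          # H800 GPU hours quoted in this article
TRAINING_TOKENS = 14.8e12      # training tokens quoted in this article


def implied_rate_per_gpu_hour(cost_usd: float, gpu_hours: float) -> float:
    """Cost divided by GPU hours: the hourly rate the two figures imply."""
    return cost_usd / gpu_hours


def tokens_per_gpu_hour(tokens: float, gpu_hours: float) -> float:
    """Average number of training tokens processed per GPU hour."""
    return tokens / gpu_hours


rate = implied_rate_per_gpu_hour(TRAINING_COST_USD, GPU_HOURS)
throughput = tokens_per_gpu_hour(TRAINING_TOKENS, GPU_HOURS)

print(f"Implied rate: ${rate:.2f} per GPU hour")
print(f"Throughput: {throughput / 1e6:.1f}M tokens per GPU hour")
```

With these inputs the implied rate works out to a little over $2 per GPU hour.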

DeepSeek vs ChatGPT

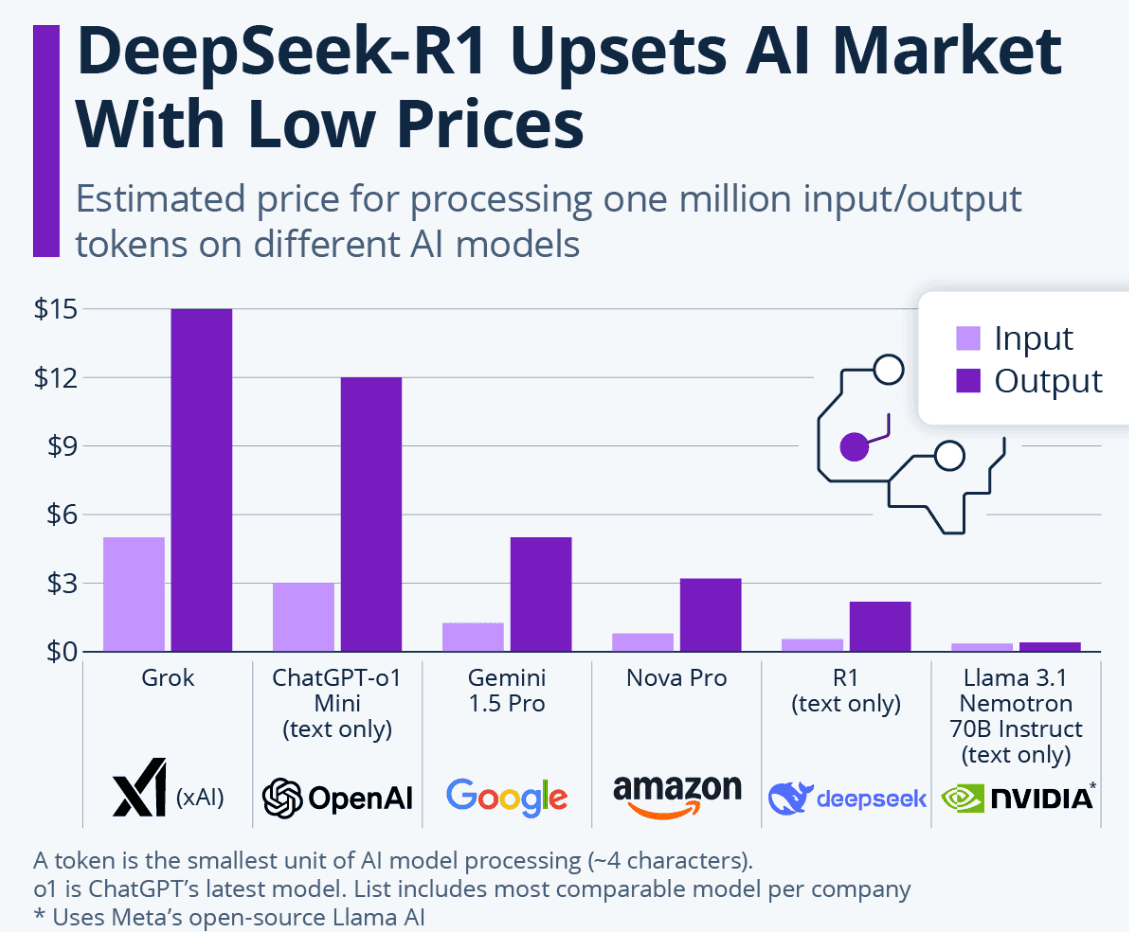

DeepSeek-R1 is significantly cheaper than ChatGPT o1-mini for both input and output tokens. For example, DeepSeek-R1’s input-token cost is less than 1/5th of o1-mini’s, and its output-token cost is nearly half the price.
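To make per-million-token pricing concrete, the sketch below estimates what a single workload would cost at the DeepSeek-R1 prices quoted in this article ($0.55 per million input tokens and $2.19 per million output tokens). The function name `request_cost` and the token counts are illustrative assumptions, not part of any DeepSeek API.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate the cost in USD of a workload billed per million tokens."""
    input_cost = input_tokens * input_price_per_million
    output_cost = output_tokens * output_price_per_million
    return (input_cost + output_cost) / 1_000_000


# DeepSeek-R1 prices as quoted in this article (USD per million tokens).
R1_INPUT_PRICE = 0.55
R1_OUTPUT_PRICE = 2.19

# Hypothetical workload: 200K input tokens and 50K output tokens.
example_cost = request_cost(200_000, 50_000, R1_INPUT_PRICE, R1_OUTPUT_PRICE)
print(f"Estimated cost: ${example_cost:.4f}")
```

Swapping in another model's input and output prices gives a like-for-like comparison for the same workload.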

Pricing Comparison (Per 1 Million Tokens)

Similarly, DeepSeek-R1 is roughly 1.8x cheaper than Claude 3.5 Haiku for input and output tokens.

Comparison between other AI Models for processing Input/output Tokens

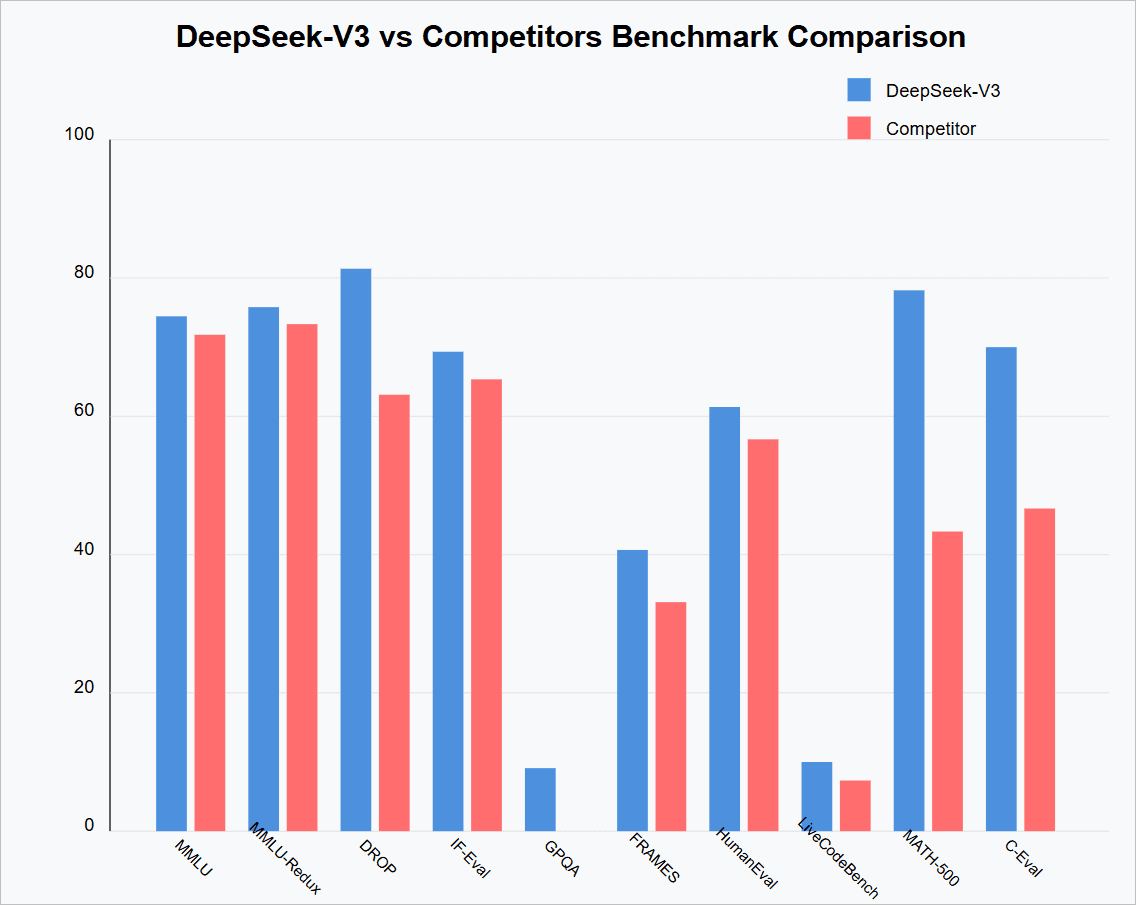

Comparison of Performance with Other Competitors Across Different Benchmarks

According to DeepSeek’s own claims, DeepSeek-V3 has achieved remarkable results across a wide range of benchmarks, outperforming both its predecessors and competing models. Here are some highlights:

Architecture and Design (Smart Design)

DeepSeek-V3 employs a Mixture of Experts (MoE) architecture that activates 37B out of 671B parameters, optimizing efficiency through specialized task handling.

Interesting Stat: Uses just 5.5% of total parameters for any given task while maintaining superior performance
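The idea behind a Mixture of Experts layer can be sketched in a few lines of Python. The example below is a generic toy top-k router, not DeepSeek's actual routing code: the function `top_k_route`, the eight gate scores, and the choice of `k=2` are all illustrative assumptions. Only the 37B and 671B parameter counts come from this article.

```python
import math


def top_k_route(gate_scores: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalise their weights."""
    if not 0 < k <= len(gate_scores):
        raise ValueError("k must be between 1 and the number of experts")
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_scores[i] for i in chosen)  # subtract max for numerical stability
    exp_scores = {i: math.exp(gate_scores[i] - peak) for i in chosen}
    total = sum(exp_scores.values())
    return {i: score / total for i, score in exp_scores.items()}


# Toy gate scores for 8 hypothetical experts; only 2 are activated per token.
scores = [0.1, 2.0, -1.0, 0.7, 1.5, 0.0, -0.3, 0.9]
routing = top_k_route(scores, k=2)
print(sorted(routing))  # [1, 4]

# Share of parameters active per token, from the figures quoted above.
active_fraction = 37e9 / 671e9
print(f"{active_fraction:.1%}")  # 5.5%
```

Because only the selected experts run for each token, compute scales with the active parameters rather than with the full model size.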

Language Understanding (Basic Language Tasks)

Performance comparison in core language tasks:

Takeaway: Shows consistent improvement over GPT-4, with the largest gain of 3.3 points in complex reasoning tasks (MMLU-Pro)

Reading & Comprehension (Reading Skills)

Comprehensive analysis of text understanding capabilities:

Takeaway: Achieves nearly 8-point improvement in reading comprehension (DROP) over GPT-4

Technical Capabilities (Technical Abilities)

Performance in specialized technical tasks:

Takeaway: Shows remarkable 15.6-point improvement in mathematical problem-solving
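The point gaps can be reproduced from the scores listed in the key statistics at the top of this article (coding 82.6 vs 80.5, math 90.2 vs 74.6). A minimal sketch, with dictionary keys chosen by us for readability:

```python
# (DeepSeek-V3 score, GPT-4 score) pairs as quoted in this article.
scores = {
    "coding (HumanEval-Mul)": (82.6, 80.5),
    "math (MATH-500)": (90.2, 74.6),
}

# Round to one decimal place to avoid floating-point noise in the differences.
gaps = {name: round(deepseek - gpt4, 1) for name, (deepseek, gpt4) in scores.items()}

for name, gap in gaps.items():
    print(f"{name}: +{gap} points")
```

The math gap matches the 15.6-point takeaway above, while the coding gap is a much narrower 2.1 points.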

Multilingual Capabilities (Language Range)

Chinese language performance comparison:

Takeaway: Demonstrates a significant 10.5-point lead in Chinese language understanding

Here's a performance comparison between DeepSeek and its competitors:

Chinese language performance of the DeepSeek-R1 model and similar models in 2025, by benchmark

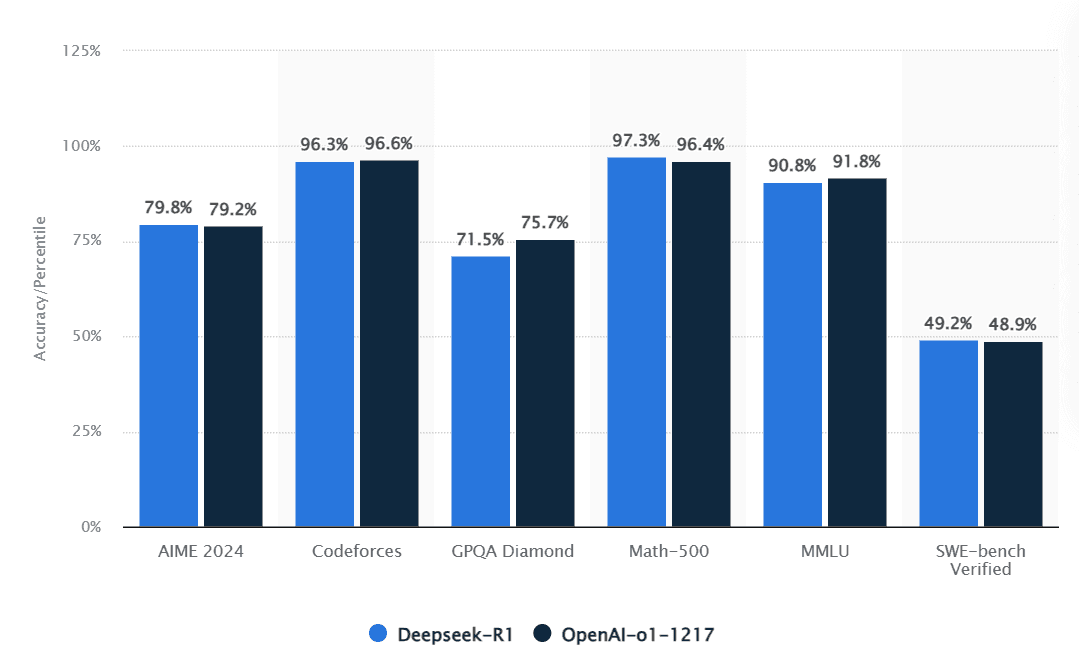

Performance comparison of DeepSeek-R1 and OpenAI-o1-1217 in 2025, by specific benchmarks

In a 2025 performance comparison, DeepSeek's AI model DeepSeek-R1 delivered results on par with OpenAI's OpenAI-o1-1217 model.

This result has garnered a lot of attention because DeepSeek's model is considered to be notably more efficient and was trained with a significantly lower budget.

What’s next for DeepSeek

DeepSeek is growing fast and changing the AI world with its powerful and affordable models. It outperforms big competitors, lowers AI training costs, and attracts millions of users. As it expands worldwide, DeepSeek will likely improve its technology, create smarter AI, and bring AI tools to more people and businesses.

With its smart design and low prices, DeepSeek is set to shape the future of AI and make advanced technology more available to everyone.

Data Sources:

- https://www.deepseek.com/

- https://www.statista.com/chart/33839/prices-for-processing-one-million-input-output-tokens-on-different-ai-models/

- https://seo.ai/blog/deepseek-users-downloads

- https://www.statista.com/statistics/1552890/deepseek-performance-of-deepseek-r1-compared-to-similar-models-by-chinese-benchmark/

- https://www.statista.com/statistics/1552824/deepseek-performance-of-deepseek-r1-compared-to-open-ai-by-benchmark/

- https://docsbot.ai/models/compare/o1-mini/deepseek-r1

- https://www.bbc.com/news/articles/cx2k7r5nrvpo

- https://appmagic.rocks/product-deck

- https://www.businessofapps.com/data/deepseek-statistics/

- https://www.demandsage.com/deepseek-statistics/

- https://docsbot.ai/models/deepseek-v3

- https://en.wikipedia.org/wiki/DeepSeek

- https://trends.google.com/trends/explore?date=today%201-m&geo=IN&q=DeepSeek&hl=en
